The digital age is making children highly dependent on technology, with social media playing a pivotal role in their lives. While technology offers opportunities for education and creativity, it also exposes young people to significant risks that can profoundly affect their mental health and development. According to a 2023 report by the European Commission, between one in three and three in four young people in the EU spend more than three hours a day on social media, a level of screen time that weighs on their well-being. This overexposure, linked to mental health issues such as anxiety, depression, and low self-esteem, underscores the need for a safer and healthier digital environment.

The World Health Organization (WHO) has estimated that by 2022 the rate of engagement with misinformation and harmful content among adolescents had risen to 11%, up from 7% in 2018. This growth highlights the negative impact of digital neglect on mental well-being and daily functioning, particularly among minors. Social media platforms, often designed to maximise a young audience’s engagement, expose children to curated content that distorts self-perception. Constant exposure to idealised content can lead to feelings of inadequacy, dissatisfaction, and a lack of confidence, which points to the fear that children are caught up in unbalanced and anti-social content.

Clearer guidance on protecting children’s digital privacy is crucial. The Digital Services Act (DSA) framework, although advancing, remains insufficient. Essential measures include implementing privacy-protective default settings to reduce unsolicited contact, developing calibrated recommender systems that steer children away from harmful content, and enhancing safety controls such as blocking and muting. These measures would prevent children from receiving targeted texts or videos, protecting their privacy and supporting healthier engagement.

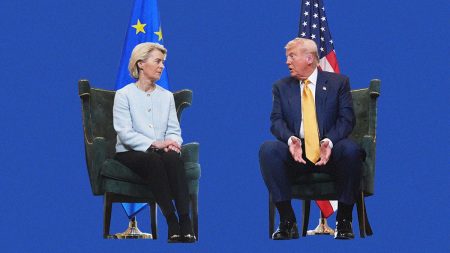

The European Commission’s draft digital protection guidelines aim to establish age verification as a mandatory feature across all platforms, ensuring that children are aware of their legal rights and limitations. Enforced age verification would prevent minors from bypassing age restrictions and ensure that platforms meet basic well-being standards. These measures would also enable platforms to enforce EU and national laws on the minimum age of access to specific digital services, fostering a fairer digital landscape.

The digital age is not only a source of vulnerability but also a source of frustration for minors seeking a healthier lifestyle. The author and colleagues emphasise the importance of collaboration between parents, educators, and platforms to create an environment that fosters meaningful learning, creativity, and connection. By prioritising age verification, building a reliable and safe digital environment, and addressing the root causes of digital harm, Europe can ensure that children emerge as well-rounded citizens capable of managing their own lives. This requires a greater commitment to creating a safe digital future for the next generation.